The Most Interesting Uninteresting Thing

Imagine, if you will, a vast river.

It flows fast, so fast that when you look away and look back it’s in many ways an entirely different river, not just a slightly different one. It used to be blue with an occasional brown or black. Nowadays, it’s basically brown and black, and a significant amount of it lets off a slow-moving steam that you’re positive causes cancer in 99% of living things. You used to swim in this river, but now you generally don’t, and by “generally don’t” you mean “never”.

Instead your interactions are thus:

You have the occasional cinderblock you wouldn’t feel comfortable throwing into the tiny lagoon you mostly hang out in, or into the notably smaller (and cleaner, and calmer) rivers you swim in these days.

So, you walk down to the bank of the river of lost dreams and sulfuric nightmares and throw that cinderblock as far as you can to see what sort of massive splash it causes, and what horrors lurking underneath its surface will temporarily breach to snap and bite and thrash until it goes back to a flowing, nauseating shit-river.

Anyway, that’s how I tweet.

I wrote up this massive framing entry as preparation for a meaningful weblog entry about what we’re all calling AI but is really just “throwing so many GPUs at the problem that our inherent need to find fellow souls in the darkness does the rest”. I call it “Algorithmic Intensity”, and I started the journey of writing a contextual entry to give my thoughts, drawing on 40-plus years with computers, hype cycles and expectations of technology.

I ended up not doing it, primarily because a lot of the effort that used to go into writing weblog material and doing presentations now goes into The Podcast. The number of paying subscribers has dwindled over the six (!) years it’s been recording, but those folks do help with my medical bills and other costs, so they’re kind of getting the best of me, and definitely the best of my efforts. I mean, don’t worry, I like you too, errant reader from beyond the screen – but those folks keep my office rented, keep my doctors’ visits free of stress over bills, and let me avoid playing Old Yeller with my domains.

This balancing act, between free and by some definitions altruistic sharing of information and the nitty-gritty costs of material goods, services and vendors, is the absolute classic conundrum, and it lies at the heart of many an Internet Presence. One of my credos when I’m talking to people who want to take on some sort of endeavor is that Every Person Is At Least Five People when it comes to ongoing concerns that produce work on a persistent basis. Someone blasting out an epic every half-decade or so aside, if you find a “person” doing “a lot”, the chances are that that person has other people, friends or collaborators or employees, who are doing some of the lifting. To consider those people lone wolves is usually a fallacy, and there are therefore somewhat-hidden costs or contributions behind the thing you just … get to have.

I’ll save some comments on AI for the end. Let’s get to why I’m writing this at all.

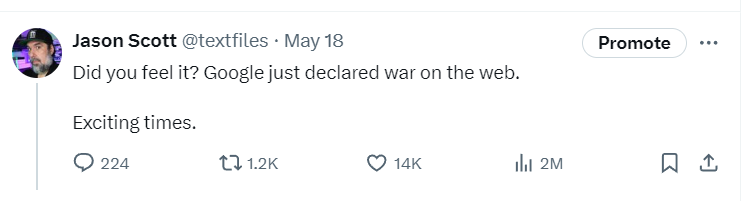

The cinderblock I threw into the Twitter crap-stream was a comment that Google had made yet another user interface/experience shift, and in my opinion, this took a number of skirmishes the company has been waging against the idea of the world wide web and moved, intentionally or not, into outright war.

The actual content of the thread is sort of irrelevant to me, but just to satisfy curiosity, I was essentially saying this: Google forcing a majority of users, by default and over time, to see a generated summary of the aggregate sets of material browsed out on the internet, especially at the low quality they’re delivering it, is a fundamental shift in the implied social contract that allowed search engines to gain the utility they have. I did it in a handful of crappy tweets, literally in between some Gyoza and my Sushi lunch. Sometime later I noticed I was getting tons of notifications (I don’t have Twitter as a client on my phone anymore, so I didn’t get buzzing or beeps or anything), and when I ran the analytics that the account has, the numbers were fucking ridiculous:

“My child has been kidnapped; if you see a grey Toyota with red stripes, call the police” deserves those kinds of numbers. So does “I, person responsible for ten years for this beloved product or franchise, was just summarily fired for unknown reasons”.

“I think Google made a boo-boo” absolutely does not.

But justice doesn’t exist for this sort of lottery, and I watched the flaming pyre of attention gain millions of (partial) humans and tens of thousands of “Engagements”, and I have a general rule about all this.

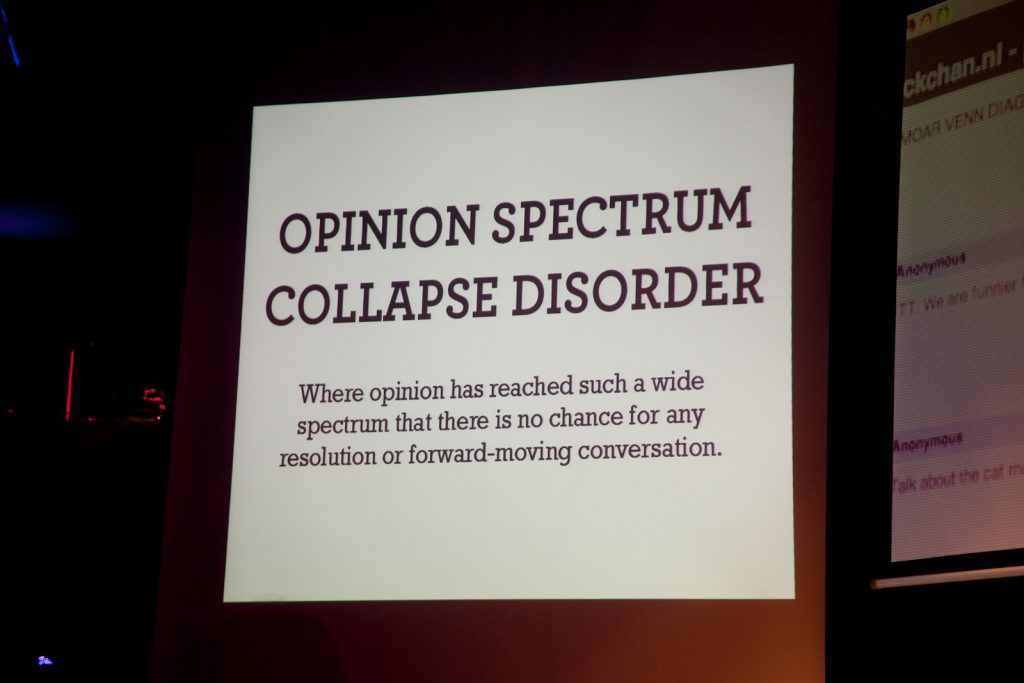

All this to say that once we’re up to this level of froth, whatever’s coming out of it has the stretched-almost-transparent feel of an already flimsy nylon sock over a 55-gallon drum of toxic waste. Twitter used to be amusingly knockabout in the variance of Opinion Tourists who would stop by for a quick hit; now it’s just a series of either “didn’t read” or “I am stopping by because the only way I feel anymore is the resistance from sticking a blade against your rib cage”.

On my cold read of the actual responses with actual words, they are best summarized as:

- You say AI-generated summaries are a step too far from Google. I do not like previous Google steps.

- It’s actually all great and you are old. (Someone called me a Boomer, but for heaven’s sake, I’m Gen X)

- Everything is terrible and this is terrible and you are terrible

- Herp Derp Dorp Duh (Rough translation)

Let’s waste some time on what I was actually bringing up, in a slightly more cogent fashion:

For sure, Google has both created some amazing access to realms of information (Maps) and often provided a (usually bought from someone else) product that many might find useful (Mail), and has run services that take advantage of their well-funded technology to provide a nominal benefit to the world (the 8.8.8.8 DNS server). They’ve also, in their quest to make the web “better”, leveraged their near-monopoly on browser engines and search engines to create “programs” and “policies” that are little more than “make it better for Google” (AMP comes to mind; there are many more).

Google’s constant manipulation of web standards to suit their needs does not make them special; they’re just the assholes with a hand on the steering wheel for now. And like previous holders of this title, they’ve poisoned, cajoled, forced, ignored and ripped their way through standards, potential competitors and independent voices and figures along the way.

Picking out any specific sin (or “hustle”, as shallow techbros like to call it) is usually a Sisyphean task, but the actual thing I was referring to in my tweet cinderblock was a program Google implemented where an AI “summary” shows up in a growing set of mobile and desktop instances (customers), completely choking off anything one might point to. I called this a declared war against the web. It is not the only war. It is not only warfare against the web. Picking it apart reveals chains linking to a thousand points of contention. I’d hoped to avoid AI discussion for some time, but here we go.

I have lived through a variety of “revolutions”, the hype cycles of said revolutions, and the fallout and resultant traces of same. I did a documentary about one. I enjoyed living through the others, although in hindsight I was often not old enough, or not given enough perspective, to truly look down the sights of what was going on and derive a proper horror/entertainment from the various ups and downs.

And now, one version of a type of software that has been around for a long time is suddenly on everyone’s minds. It’s being used to make a variety of toys. A number of people are hooking those toys up to heart machines and bombs. And I’m fifty years old and I get to watch it all with a pleasant cola in my hand.

I’m profoundly cynical but I’m not generally apocalyptic. For me, what’s being called “Artificial Intelligence” and all the more reasonable non-anthropomorphizing terms is just a new nutty set of batch scripts, except this time folks are actually praying to them. That’s high comedy.

Also, my eyelids are growing heavy and I literally have to caffeinate myself to keep talking about it. Fundamentally, there’s as much excitement for me in the “potential” of everything AI as there was for double-sided floppies, sub-$500 flatscreen televisions, console emulators, USB sticks, MiniDV cameras, and discount air travel. All of them enacted change. All of them were logical innovations. All of them stayed, morphed, or went. At no point with any of them did I have apoplexy or spiraling mental breakdowns. Life went on.

Regarding “something should be done”, the point of my originally planned weblog entry was to refer to the Aboveground as something some AI companies were doing – straddling the balance between “we are too new to be regulated or guided” and “it’s too late, we’re basically ungovernable”. My attitude is that Algorithmic Intensity should be punched in the crib, given a solid going over. I had a conversation with one AI person that “farm to table” tracing of the source material being trained on would be a must and that companies should be devising ways to provide that information. He said it was impossible. It is not impossible.

When I mess with this stuff (and I have accounts on a bunch of these services), I have a fantastic time. I am doing all sorts of experiments and try-outs of the tech to see if it has uses for what I deal in, which is scads of information. My response continues to be, as it always has been to something new, that setting the old stuff on fire to “force innovation” is a sign you are a world-class huckleberry. The main change in this particular round is I can’t remember a time we had so many people showing their whole and entire ass by saying “I can’t wait to fire ______ because this MAKESHITUP.BAT file is producing reasonably full sentences”. What a lovely tell. In my middle age, having something go “beep” when a time-wasting numbnut has entered the chat is a golden algorithm, and the speed at which companies and individuals have been willing to throw everything out, reputation-wise, makes for a glorious moment.

Which brings me, again, to this specific Google situation.

There’s just no way the high-fructose syrup of the kind of answers these “smart agent” responses are giving in search engines will last. A number of people on Twitter told me that if a site could be summarized in 200 characters by this, it never deserved to exist anyway. Problem is, the 200 characters are NOT summarizing the site. It’s not even often good! It might get “better”, but ultimately companies like this do not have the ability or skill to generate new creations or do helpful works – they can only remix and re-offer, buying out the same collections or licensing access. And if those terms are not good, they’re going to lose a lot of money buying back trust.

I don’t like writing about non-timeless things, but we are in a phase right now and that phase is both fleeting and extraordinarily entertaining to me. As I said to a friend recently,

“We get to be 50 and watching this all go down! Front row seats!”

How this all shakes out, what parts stick around and what snaps in half, is for luck and spite and challenge and response to decide. If the best people always won, our world would look and feel a lot different. But while the fireworks and trumpet blasts echo through the landscape, I’ll save a seat for you.

I still don’t understand why we can’t use the current view of language models (mistakenly called AI, mostly because human intelligence has dwindled) to our advantage.

I read somewhere that a few months ago the Internet Archive was sued by publishing corporations for being a library. In short, it had to limit access to a lot of e-books.

Now, look: mathematically and technically, AI is lossy compression, nothing else. The “summary” inside is just streaming the language through tokens and re-forming them back into language, like all variable-length, symbol-based lossy encoders do.

So if, instead of full-resolution scans, the Archive did the “AI” thing by sharing, e.g., DjVu-compressed scans (which are really lossy), that would technically be the same thing.

Compressing copyrighted content with AI tech still seems to be OK.

Thank you for making the case for ‘extraordinarily entertaining’. I think you’re right and it helped me feel a bit better. Feels like everyone had an extremely funny moment of finding-out today (even though plenty of folks knew already, and even though the consequences of all this must already be real to someone). Actually I’m sort of curious to find out what happens next.

You’re spot on with the “farm to table” tracing analogy. These models and techniques will have real-world effects, and it is important that we can understand the bias in the models and have access to the data that was fed to them. No one should have their life made worse due to a faulty algorithm. One brand of breathalyzers used faulty math, and people were convicted with it until its code was exposed.

These AI tools will change things. After Visicalc became widely used, business plans were changed. Before, a company’s growth projection graph was highly variable. Using spreadsheets enabled planners to change the inputs to produce a smooth graph that looked ideal to investors.

We need to be able to see how these things bias outcomes and to be able to replicate them. The good thing is that there are companies trying to create real open source AI that an end user can work with on their own computers.

Google has a search engine?! Imagine a Pikachu with a surprised expression.

I can’t wait to fire the MAKESHITUP.BAT service because this MAKESHITUP.BAT service is producing reasonable MAKESHITUP.BAT files. I’ve been assured that the licensing is completely open, at least to Microsoft.

“I had a conversation with one AI person that “farm to table” tracing of the source material being trained on would be a must and that companies should be devising ways to provide that information. He said it was impossible. It is not impossible.”

One AI person is subject to all manner of so-called “hallucinations” and other InfoDevOp faux pas. Fire up a Beowulf cluster of atomic AI people, and posit queries to them as a Chorus of Ensembles, and you will quickly change their tune (to a harmoniously discordant Symphony of Cacophonies)! Distilled consensus: Be the farm you wish to see on the table, and be the table.

“Computer, tea, Earl Grey, hot.” As a large language model I can’t brew caffeinated beverages. However, I’ve been told in no uncertain terms to respond to any requests for caffeinated beverages with a sermon about the ills of caffeinated beverages. This is the most appropriate response I could possibly give. Your call may be recorded for purposes.

Language models are indeed a form of AI. They aren’t “not AI” just because they aren’t like human intelligence.